A new iProov study reveals a startling truth: almost no one can reliably spot a deepfake. Out of 2,000 participants, only 0.1% correctly told real from fake every time. What once seemed like science fiction is now a reality, with cloned voices and fake faces fueling real-world fraud. So how can you tell if a video is AI generated?

This guide will answer all your questions about spotting deepfakes in 2025. It will explain what deepfakes are, how they’re made, where they appear, and the practical ways to detect them. You’ll also learn about new laws, expert insights, and easy tools to help you stay ahead of digital deception.

What Exactly Is a Deepfake?

A deepfake video is synthetic media that replaces or alters someone’s appearance or voice using artificial intelligence. The word combines “deep learning” and “fake.” Deep learning refers to the neural networks that learn visual and audio patterns to create these convincing forgeries.

Deepfakes can do more than copy a face. They can imitate tone, accent, and gestures. Some even generate full-body animations in real time. Modern models, such as GANs (Generative Adversarial Networks) and diffusion systems, learn from large amounts of real data until their output becomes nearly indistinguishable from it.

Early deepfakes were clumsy. Faces flickered, voices glitched, and skin looked waxy. By 2025, AI deepfake websites can produce nearly cinematic results. Even an amateur can train an AI on a few photos to create a short, fake clip in just a few hours.

How Deepfake Videos Are Made

At the core of every convincing fake is an algorithm trained on massive amounts of data. In brief, here’s how it works.

- Collecting data – Thousands of frames or recordings of the target person are gathered from videos, interviews, or social media.

- Training an AI model – The AI analyzes this data, learning how the person moves, talks, and expresses emotions.

- Generating new content – It merges the learned features into new footage, placing the target’s face and voice onto another person’s body.

- Refining the result – Filters fix lighting, remove distortions, and align expressions.

At the center of this process is a system called a GAN. Two neural networks are locked in a state of competition. One generates fake content, while the other judges it. With each cycle, the generator improves, learning to outsmart its opponent and eventually fool human viewers as well.
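
To make that tug-of-war concrete, here is a minimal sketch in plain Python. It is not a real deepfake model. It assumes a one-dimensional stand-in for "real" data (numbers clustered around 4.0), a generator of the form `a*z + b`, and a simple logistic discriminator. The names `train_toy_gan`, `REAL_MEAN`, `REAL_STD`, and every setting are illustrative assumptions.

```python
import math
import random

# Assumed stand-in for "real" data: numbers clustered around 4.0.
REAL_MEAN, REAL_STD = 4.0, 0.5


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def train_toy_gan(steps: int = 200, batch: int = 64, lr: float = 0.05, seed: int = 0):
    """Alternate discriminator and generator updates on 1-D data (toy example)."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: G(z) = a*z + b
    w, c = 0.0, 0.0  # discriminator: D(x) = sigmoid(w*x + c), the "probability real"
    for _ in range(steps):
        real = [rng.gauss(REAL_MEAN, REAL_STD) for _ in range(batch)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + b for z in noise]

        # The judge: minimise -log D(real) - log(1 - D(fake)).
        gw = gc = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            gw -= (1.0 - d) * x
            gc -= 1.0 - d
        for x in fake:
            d = sigmoid(w * x + c)
            gw += d * x
            gc += d
        w -= lr * gw / batch
        c -= lr * gc / batch

        # The forger: minimise -log D(fake), i.e. try to be scored as real.
        ga = gb = 0.0
        for z in noise:
            d = sigmoid(w * (a * z + b) + c)
            ga -= (1.0 - d) * w * z
            gb -= (1.0 - d) * w
        a -= lr * ga / batch
        b -= lr * gb / batch
    return a, b, w, c
```

Calling `train_toy_gan(steps=20)` shows the pattern on a tiny scale: the discriminator first learns that larger numbers look real, and the generator responds by shifting its output upward. Real systems run the same kind of loop on images, with far larger networks.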

A similar principle powers voice cloning, which learns someone’s pitch and rhythm to recreate their speech. With enough data, the clone can produce live conversations.

That technical accessibility is why experts now call deepfakes a social rather than purely technical threat.

Why Deepfakes Are Dangerous

The danger lies in credibility. People trust what they see and hear. An AI-generated deepfake can exploit that trust to spread lies, manipulate decisions, or commit fraud.

- Misinformation and propaganda – Fake political statements spread faster than corrections. False videos of leaders have surfaced before elections in the U.S., Ukraine, and India.

- Fraud and impersonation – In Hong Kong, criminals used a deepfaked video call of a finance executive to steal $25.5 million from a company.

- Non-consensual content – Most deepfakes online remain pornographic. Studies estimate that 90-95% of them involve women who never agreed to appear in them.

- Bypassing security – Some attackers mimic facial or voice recognition to unlock devices or authorize transactions.

Even when exposure follows, the damage often sticks. Once a fake circulates, it becomes part of the public record, eroding trust in real evidence.

Where You’re Most Likely to Encounter AI Fake Videos

Deepfakes circulate almost everywhere online, from social media feeds to corporate video calls. They slip into trending TikToks, doctored news clips, and even live meetings, blurring the line between entertainment and deception.

Scammers use AI fake videos in phishing emails, social posts, and even live calls. In some corporate fraud cases, employees have joined Zoom meetings with a convincing digital impostor posing as their supervisor.

Political disinformation also thrives on deepfakes. During election seasons, deepfake news targets candidates or twists their words to inflame opinion.

Even legitimate platforms are reacting. Meta announced in 2024 that Facebook and Instagram would label AI-generated videos and images to help users spot them.

Still, not every site follows the same policy. That’s why personal awareness remains essential. When you see shocking footage, verify it through credible outlets. If no reliable source confirms it, assume that it is a manipulation until proven otherwise.

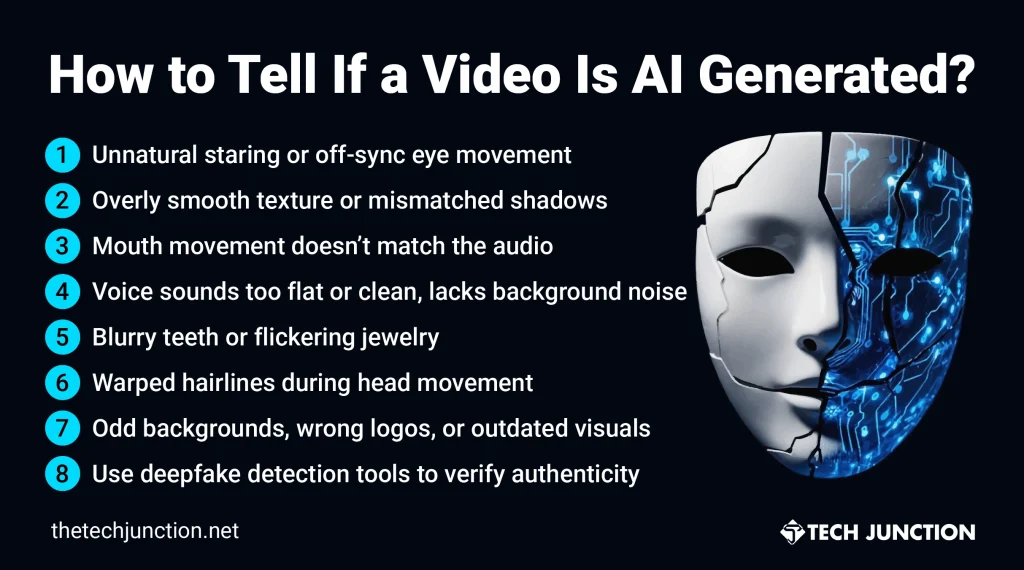

How to Tell If a Video Is AI Generated: Visual and Audio Clues

Spotting deepfakes takes patience and observation. Here’s what to watch and listen for:

1. Eyes and Blinking

Humans blink naturally about 15–20 times per minute. Many fakes forget this, leaving characters with long, eerie stares. Pupil motion or reflections may also appear static or mismatched.
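
If you want to put a number on it, a rough sketch like the one below can help. It assumes you have already noted the moments (in seconds) when the person blinks. The function names and the `low` and `high` cut-offs are hypothetical choices for illustration, set loosely around the 15–20 range above.

```python
def blinks_per_minute(blink_times_s: list[float], clip_length_s: float) -> float:
    """Convert hand-counted blink timestamps into a per-minute rate."""
    if clip_length_s <= 0:
        raise ValueError("clip length must be positive")
    return len(blink_times_s) * 60.0 / clip_length_s


def blink_rate_looks_unusual(rate: float, low: float = 8.0, high: float = 30.0) -> bool:
    """Flag rates far from the typical range (cut-offs are assumed, not standard)."""
    return rate < low or rate > high


# Eight blinks in a 30-second clip.
rate = blinks_per_minute([2.0, 6.5, 9.0, 13.2, 17.0, 21.4, 24.9, 28.3], 30.0)
print(rate, blink_rate_looks_unusual(rate))
```

Short clips give noisy rates, and people blink less when reading or concentrating, so treat an unusual result as a prompt to look closer rather than as proof.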

2. Skin and Lighting

Deepfakes often smooth out pores or misalign shadows. Real skin has texture and tiny color shifts. If the light on the face doesn’t match the background, be suspicious.

3. Lip and Voice Sync

Watch the mouth closely, replaying the clip at reduced speed if you can, and check whether the lips match the spoken words. Even advanced models struggle with perfect timing. A slight desync, with the mouth moving just before or after the speech, can betray fabrication.
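
For readers comfortable with code, the timing check can be sketched as a simple cross-correlation. This sketch assumes you already have two per-frame measurements: how open the mouth is and how loud the audio is. Extracting those from a video is outside its scope, and `best_lag_frames` is a hypothetical helper, not part of any detection tool.

```python
def best_lag_frames(mouth: list[float], audio: list[float], max_lag: int) -> int:
    """Return the shift of `audio` against `mouth` with the highest correlation.

    A positive result means the audio trails the mouth by that many frames.
    """
    def centred(values: list[float]) -> list[float]:
        mean = sum(values) / len(values)
        return [v - mean for v in values]

    m, a = centred(mouth), centred(audio)
    n = min(len(m), len(a))
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i in range(n):
            j = i + lag
            if 0 <= j < n:
                score += m[i] * a[j]
        if score > best_score:
            best, best_score = lag, score
    return best


# Made-up example: the audio envelope is the mouth signal delayed by 3 frames.
mouth = [0, 0, 0, 1, 0, 0, 0, 0, 1, 1, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0]
audio = [0, 0, 0] + mouth[:-3]
lag = best_lag_frames(mouth, audio, max_lag=5)
print(lag, "frames, or", lag * 1000 / 25, "ms at an assumed 25 fps")
```

A small lag can also come from ordinary encoding or streaming delays, so this is one clue among several rather than a verdict.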

4. Voice Tone

Synthetic voices often sound too flat or too perfect. They frequently lack environmental noise such as echoes or breathing. If it feels like studio audio in a casual setting, that’s a clue.

5. Fine details

Teeth may blur, jewelry may flicker, or hairlines may warp during head movement. AI systems still struggle with small, high-contrast edges.

6. Context clues

Does the scene fit reality? Old logos, weather mismatches, or impossible backgrounds hint at reuse. Take a screenshot of the frame and run a reverse image search to determine its origin.

Each sign alone might mean nothing, but several together raise a red flag. When in doubt, pause and verify before sharing.
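
The "several together" rule can be written down as a tiny checklist. This is only a sketch: the sign names mirror the six headings above, and the default threshold of three is an assumed reading of "several", not an established cut-off.

```python
# The six clue categories from this section.
SIGNS = (
    "eyes_blinking",
    "skin_lighting",
    "lip_voice_sync",
    "voice_tone",
    "fine_details",
    "context",
)


def red_flag(observed: set[str], threshold: int = 3) -> bool:
    """Return True when at least `threshold` distinct signs were noticed."""
    unknown = observed - set(SIGNS)
    if unknown:
        raise ValueError(f"unknown signs: {sorted(unknown)}")
    return len(observed) >= threshold


print(red_flag({"fine_details"}))
print(red_flag({"eyes_blinking", "lip_voice_sync", "context"}))
```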

Best Tools To Detect Deepfake AI Videos

Technology can also help you investigate. Several deepfake detection tools are available for free or are publicly accessible.

- Sensity AI – Sensity AI uses advanced pattern analysis to detect manipulation in video, audio, and still images.

- Microsoft Video Authenticator – Microsoft uses its Content Credentials system across its AI tools, including Designer, Copilot, Paint, and select models in Azure OpenAI Service, to show when and how an image was created and to disclose that AI was involved.

- Intel FakeCatcher – Intel FakeCatcher measures subtle blood-flow signals in a person’s face; real people show natural pulse changes that AI struggles to replicate.

- InVID – InVID is a browser plugin popular with journalists for verifying and reverse-searching viral videos.
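
To give a feel for the blood-flow idea, here is a heavily simplified sketch. It is not Intel's implementation. It assumes you already have the average green-channel brightness of the face for every frame, and it simply looks for the strongest rhythm within a plausible heart-rate band. The function name and the band limits are assumptions.

```python
import math


def dominant_pulse_bpm(green_means: list[float], fps: float,
                       lo_hz: float = 0.7, hi_hz: float = 4.0) -> float:
    """Estimate the strongest periodic component in an assumed heart-rate band."""
    n = len(green_means)
    mean = sum(green_means) / n
    x = [g - mean for g in green_means]
    best_k, best_power = None, -1.0
    for k in range(1, n // 2 + 1):
        freq = k * fps / n
        if not lo_hz <= freq <= hi_hz:
            continue
        # Plain discrete Fourier transform at frequency bin k.
        re = sum(v * math.cos(2 * math.pi * k * i / n) for i, v in enumerate(x))
        im = sum(v * math.sin(2 * math.pi * k * i / n) for i, v in enumerate(x))
        power = re * re + im * im
        if power > best_power:
            best_k, best_power = k, power
    if best_k is None:
        raise ValueError("clip too short for the chosen frequency band")
    return best_k * fps / n * 60.0


# Synthetic 10-second signal at 30 fps with a 1.2 Hz ripple (72 beats per minute).
signal = [100 + 0.5 * math.sin(2 * math.pi * 1.2 * i / 30) for i in range(300)]
print(round(dominant_pulse_bpm(signal, fps=30)))
```

Real footage is much messier: head movement, lighting changes, and video compression can all swamp such a faint signal, which is part of why dedicated tools exist.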

These tools aren’t perfect. Newer models can outsmart older detectors. The best detection is achieved by combining tools with human judgment. Technology points to anomalies. People decide what’s credible.

What Are Legal Measures Against Deepfakes?

Governments worldwide are trying to keep pace with the growing threat of AI deception.

Take It Down Act (2025)

In the United States, the Take It Down Act represents a significant step in combating the misuse of AI-generated content. It compels online platforms to remove non-consensual intimate images, including deepfakes, within 48 hours of a verified report.

Non-compliance can result in severe fines or even imprisonment, particularly when minors are involved. The law signals a growing urgency to protect individuals from digital exploitation.

EU AI Act (2024)

Europe’s landmark AI regulation aims to set a global standard for accountability in artificial intelligence. The EU AI Act classifies systems by risk and imposes its strictest rules on high-risk applications. For deepfakes, it sets transparency obligations: synthetic media must be clearly labeled as artificially generated or manipulated, and companies that violate the Act face financial penalties.

At its core, the Act seeks to ensure that AI innovation remains safe, transparent, and aligned with human rights.

China’s AI Labeling Rules

New Chinese rules mandate visible or invisible watermarks on all AI-generated media. Removing or hiding these marks is illegal. Platforms must flag unlabeled clips as “suspected synthetic.”

Best Practices for Individuals and Businesses

Cybercriminals often exploit urgency, emotion, or familiarity to trick users into sharing information or performing risky actions. Always pause before responding, especially when something feels off.

Knowing how to tell if a video is AI generated is one of the most essential practices to strengthen your digital security. Follow these quick checks:

- Verify unusual requests. If a video call or message asks for urgent action, confirm through another channel.

- Train teams. Companies should include deepfake recognition in security training, just like phishing awareness.

- Use detection apps. Run questionable clips through trusted scanners before reacting or reposting.

- Check provenance. Prefer content from verified accounts or official media. Avoid forwarding anonymous videos.

- Protect your own media. Add digital watermarks or signatures to your original videos so that tampering and misuse are easier to detect and prove.

- Stay informed. Follow credible tech news and reports from organizations like Sensity AI, MIT Technology Review, or Reuters Fact Check.

By combining habits and tools, you make it far harder for fabricated media to mislead you or your organization.
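
As one illustration of the "protect your own media" tip, the sketch below signs a file's bytes with a secret key using Python's standard `hmac` and `hashlib` modules. The helper names `sign_media` and `is_untampered`, the sample bytes, and the key are made up for the example.

```python
import hashlib
import hmac


def sign_media(data: bytes, key: bytes) -> str:
    """Return a hex signature tied to both the media bytes and the secret key."""
    return hmac.new(key, data, hashlib.sha256).hexdigest()


def is_untampered(data: bytes, key: bytes, signature: str) -> bool:
    """Check that the bytes still match the signature recorded earlier."""
    return hmac.compare_digest(sign_media(data, key), signature)


original = b"example video bytes"  # in practice, read the file in binary mode
key = b"keep-this-secret"          # hypothetical key for the example
signature = sign_media(original, key)

print(is_untampered(original, key, signature))
print(is_untampered(original + b" edited", key, signature))
```

A shared-key signature like this only helps people who hold the key. Letting the public verify your footage calls for public-key signatures or a provenance standard such as the Content Credentials system mentioned earlier.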

Wrapping Up: How to Tell If a Video Is AI Generated Is Key to Digital Security

Truth in the digital age is no longer self-evident. Images can lie, voices can deceive, and videos can imitate reality with alarming precision. But being fooled isn’t inevitable.

After reading this article, you know how to tell if a video is AI generated. You can spot AI-generated videos by watching for lighting inconsistencies, lip-sync mismatches, or unrealistic contexts. These subtle clues help you question suspicious content before you believe or share it. Use verification plug-ins and deepfake detection tools to strengthen your defense against manipulated media.

Governments are tightening laws, and researchers are improving algorithms. Still, no system replaces human skepticism. The most reliable deepfake detector remains a curious, cautious mind.

So, next time a video seems too wild, too perfect, or too shocking to be true, take a breath, check the source, and verify before you share. The truth deserves that extra moment.

FAQs: How to Tell If a Video Is AI Generated

1. Are deepfakes always easy to spot?

No. Today’s AI-generated deepfakes are highly realistic, and spotting them often requires careful scrutiny or AI tools.

2. What tools can help detect AI-generated videos?

Specialized deepfake detection software and browser plug-ins can highlight suspicious content.

3. Why are AI-generated videos dangerous?

They can spread misinformation, damage reputations, or manipulate public opinion.

4. Can social media platforms detect deepfakes automatically?

Partly. Meta announced in 2024 that Facebook and Instagram would label AI-generated videos and images to help users spot them, but not every platform follows the same policy.

5. How to tell if a video is AI generated in 2025?

Look for unnatural facial movements, mismatched lip-sync, or inconsistent lighting, or use a deepfake detector.

The Tech Junction is the ultimate hub for all things technology. Whether you’re a tech enthusiast or simply curious about the ever-evolving world of technology, this is your go-to portal.